A leaked Commission draft for a so-called “Digital Omnibus” would undermine core GDPR protections under the guise of “simplification.” It would narrow the scope of what constitutes personal data, curtail data-subject rights, create AI-specific carve-outs (even for sensitive data), and relax device-level protections currently anchored in the ePrivacy regime. If adopted, this would materially weaken the tools citizens — and fraud victims — rely on to get evidence, stop abuse, and obtain redress.

What just happened?

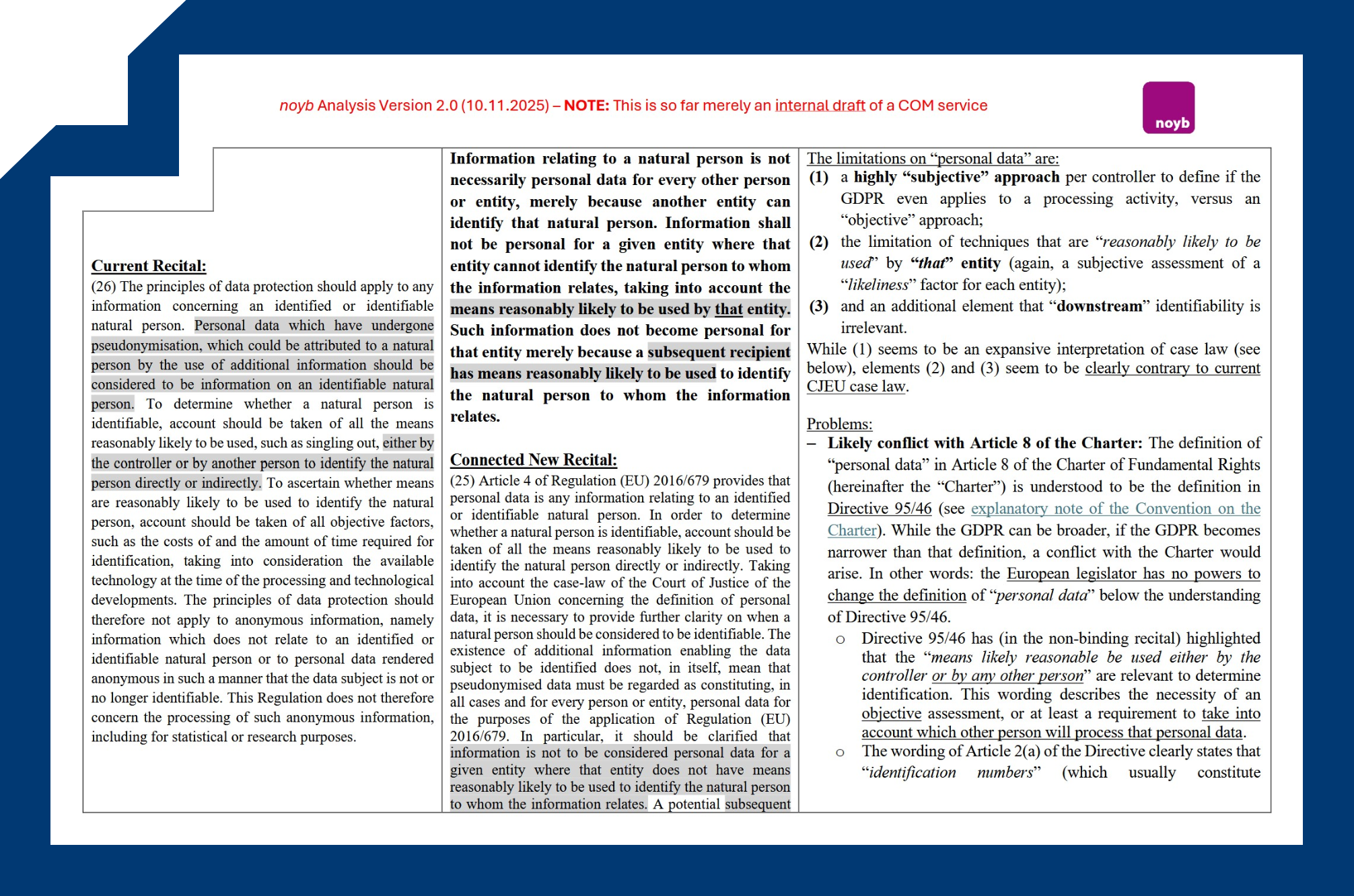

On 10 November 2025, noyb published an analysis of an internal Commission draft proposing far-reaching amendments to the GDPR via a “Digital Omnibus.”

Leaked working papers indicate that most Member States requested targeted tweaks at most, while Germany circulated a detailed paper urging broader changes — including exclusions for “low-risk” processing — that go beyond routine simplification.

What’s on the table — in plain terms

1) Redefining “personal data” through a subjective lens.

The draft would move toward a controller-by-controller test for identifiability (“means likely to be used by that entity”), which could place pseudonymous identifiers (such as ad IDs and cookie IDs) outside the GDPR for many controllers. That clashes with CJEU case law, which takes an objective, practical-means view and has long treated online identifiers as personal data. The change would upend more than 20 years of jurisprudence.

2) Gating data-subject rights behind “data-protection purposes.”

Access, rectification, and erasure would be usable only for narrowly defined “data-protection” aims. In practice, employers, platforms, or payment service providers (PSPs) could refuse access requests made to gather evidence for wage claims, consumer disputes, or litigation. That contradicts the CJEU’s rulings: the context or purpose of an Article 15 request “cannot influence the scope of that right.”

3) An AI “wildcard” in Articles 6 and 9.

The draft would let controllers process personal data, including special categories in certain circumstances, for AI training and even for the operation of AI systems, with only vague safeguards and an impractical “opt-out.” That abandons the GDPR’s technology-neutral design and risks normalising large-scale ingestion of citizens’ data by default.

4) Raising the breach-notification bar.

The duty to notify authorities would shift from any “risk” to only high risk, aligning the threshold with data-subject notification and ensuring that many incidents never reach regulators. That is a sizeable compliance holiday with obvious downstream harms for users.

5) Process & politics.

Parliament and Council would still have to debate the text, but the chosen vehicle, an omnibus “simplification” file, skips the depth of impact assessment normally expected in such a sensitive field. Meanwhile, the Commission’s digital portfolio is led by Executive Vice-President Henna Virkkunen, whose services have driven the package.

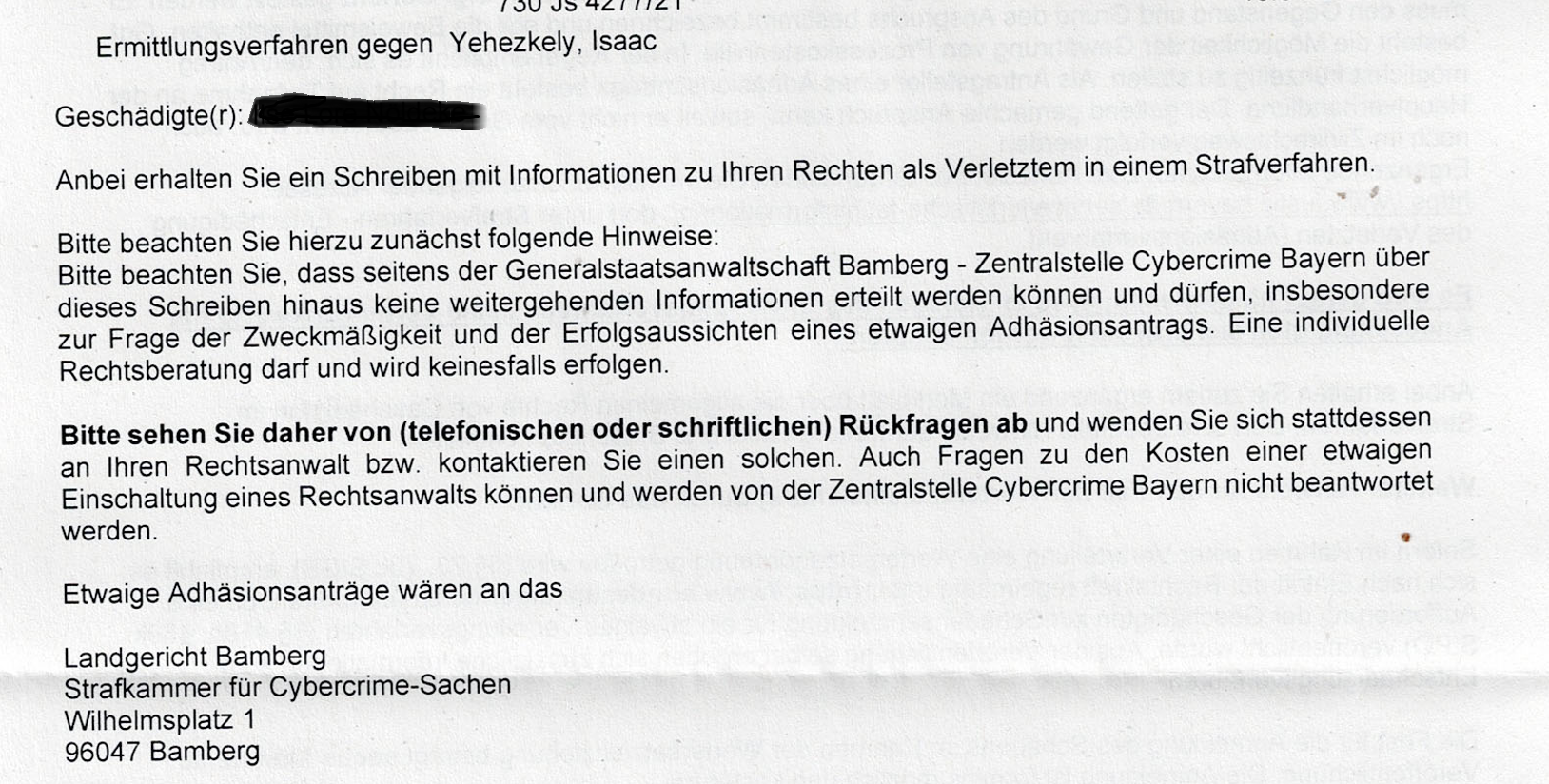

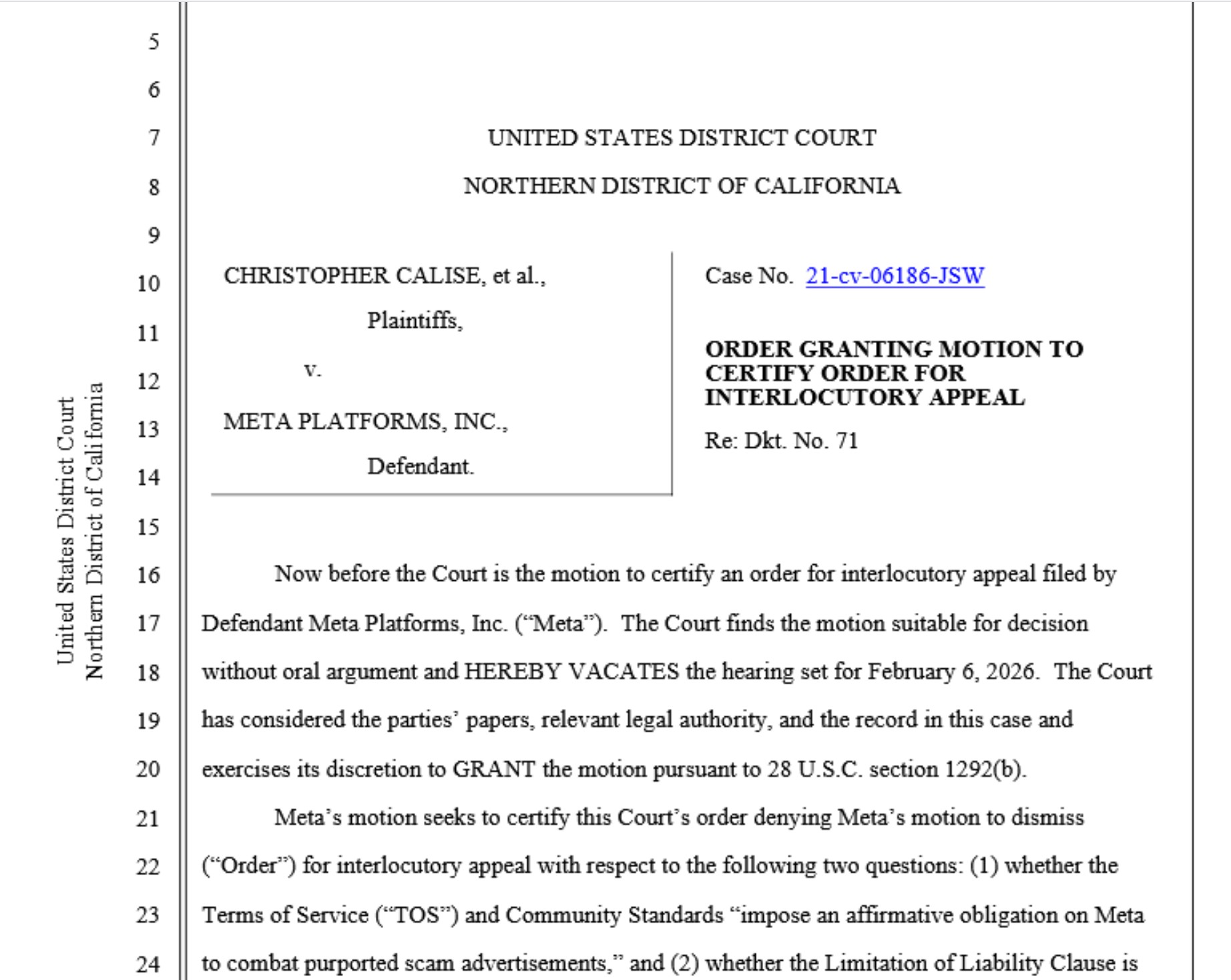

Why this is disastrous for fraud victims

Evidence gathering would be crippled. Victims and their representatives routinely invoke Article 15 of the GDPR to obtain logs, risk flags, chargeback metrics, KYC/AML markers, IP addresses, device IDs and internal notes from platforms, PSPs, and ad brokers, precisely to prove fraud chains and enable civil claims. A purpose-limited right of access would hand enablers a ready-made refusal template.

Pseudonymous tracking would get a lifeline. Narrowing “personal data” for controllers that claim they cannot re-identify individuals would entrench the ad-tech status quo (IDs everywhere, accountability nowhere). As investigative reporting has shown, “pseudonymous” ad data can be weaponised, including against EU officials. Legalising that retroactively is the opposite of consumer protection.

AI carve-outs invite bulk ingestion of victims’ data. An open-ended lawful basis for AI training and operation on scraped or brokered datasets, with only theoretical opt-outs, means victims’ posts, reviews, complaint narratives, and leaked records can be absorbed and resurfaced without meaningful control. That normalises the very data flows used to micro-target and re-victimise consumers.

This is not “SME relief.” It’s structural deregulation.

Member State feedback shows broad support for targeted administrative relief and better enforcement tools, not a reopening of the GDPR’s core definitions or rights. Germany, by contrast, pushed a two-stage reform agenda and even floated exclusions for low-risk processing. The leaked draft tracks those maximalist ideas far more closely than any balanced SME agenda.

EFRI’s position

Strip the GDPR surgery out of the Omnibus. Any change to core terms (personal data, special categories) or to fundamental rights (Articles 15–17, 21) must follow a full legislative process with a proper evidence base, not a “simplification” vehicle.

No AI-specific carve-outs. The GDPR is technology-neutral by design. If AI developers need personal data, they must satisfy the same lawful-basis tests as everyone else, especially for Article 9 data.

Keep device protections intact. Article 5(3) of the ePrivacy Directive is a floor, not a ceiling. Consent for device access should remain the rule and must not morph into a multi-purpose “legitimate interests” basis.

Preserve purpose-agnostic rights. Access and rectification rights must remain usable for any legitimate aim, including litigation, journalism, or consumer redress, as confirmed by the CJEU.

Enforce the GDPR we already have. The problem is under-enforcement, not over-protection. Raising breach thresholds and blessing pseudonymous tracking will only widen the impunity gap.

Request: firewall lawmaking from Big Tech lobbying

Big Tech firms deploy some of the largest lobbying budgets in Brussels, vastly exceeding the resources of consumer organisations and even many regulators. That financial firepower must not translate into privileged access, text authorship, or de facto veto power over citizens’ fundamental rights under Articles 7 and 8 of the EU Charter of Fundamental Rights.

Our requests

Impose a moratorium on all non-public meetings, “non-papers,” and informal drafting input from regulated Big Tech firms and their paid intermediaries on any text that would amend the GDPR, the ePrivacy rules, or other data-rights safeguards.

Full provenance disclosure for every edit: publish, with tracked changes, the author and origin of any wording introduced into draft texts after 1 September 2025; reject text whose authorship cannot be verified.

Transparency parity: for each Big Tech contact logged, ensure equal, documented consultations with data protection authorities, consumer groups, and civil society experts.

No secondments or revolving-door influence: suspend industry secondments and require recusals where prior employment or ongoing financial ties create conflicts of interest.

Keep device-level and rights protections out of “simplification” files: any change to core definitions (personal data, special categories) or to data-subject rights must undergo a full, stand-alone legislative process with an impact assessment and public consultation, not through omnibus shortcuts.